Filament: Overture “High Speed” TPU

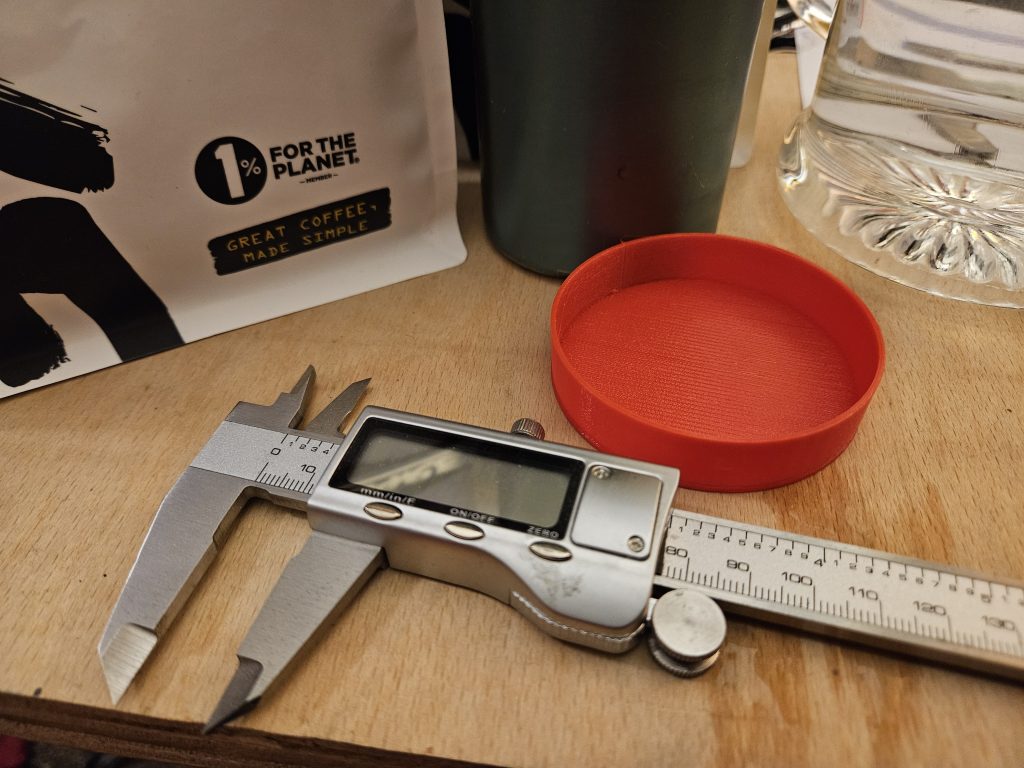

Yesterday I observed Kat draping some random glove liner over her webcam after use. Making a cap for it popped into mind as an obvious thing to do, and the 3d printer was gathering dust – the inspiration hit me. I fired up FreeCAD and knocked up about the most basic of basic 3d designs. I popped some TPU into the dehydrator. Today I lobbed the design over to the desktop, loaded it into the slicer…

A few months ago I tuned the slicer settings for getting some good basic TPU prints out of my printer (A FlashForge Adventurer 3 Pro) – it took a fair bit of fiddling. It’s not a printer that’s really suitable for TPU, especially given it’s a Bowden Tube based model rather than Direct Drive. (The foibles of this printer are a story for another time, but the basic background is I needed to print fire retardant ABS, so needed an enclosed printer, I did my research, decided on this, and it -eventually- did the job I needed. I would not choose or recommend it as a general purpose hobby 3d printer.) Of course I’d made no record of the settings, not so much as a saved FlashPrint profile… I admonished myself accordingly.

Thankfully my memory wasn’t too bad and with only one abortive attempt I got a good enough print. The key thing with printing TPU in this printer is: slowly, slowly, wins the race. Previously I spent loads of time struggling with the material clumping up in the extrusion mechanism. This time around I started slow, low retraction, and using a 0.6mm nozzle helps a lot too.

In terms of the settings in FlashPrint (the slicer that goes with this printer, I’ve never really got around to trying another slicer) I started with the “standard” 0.6mm PLA settings in FlashPrint 5.8.5 and adjusted these settings:

Printer

Extruder Temperature: 225C

Bed Temperature: 45C

General

Layer Height: 0.30mm

First Layer Height: 0.30mm (the default)

Base Print Speed: 20mm/s

Retraction Length: 2.0mm

Retract Speed: 10mm/s

Infill

Top Solid Layers: 4

Bottom Solid Layers: 4

Raft

Enable Raft: No

Cooling

Cooling Fan Control: Always Off

Advanced

First Layer Extrusion Ratio: 100%

Others

Z Offset: 0.05mm (entirely dependent on your calibration!)

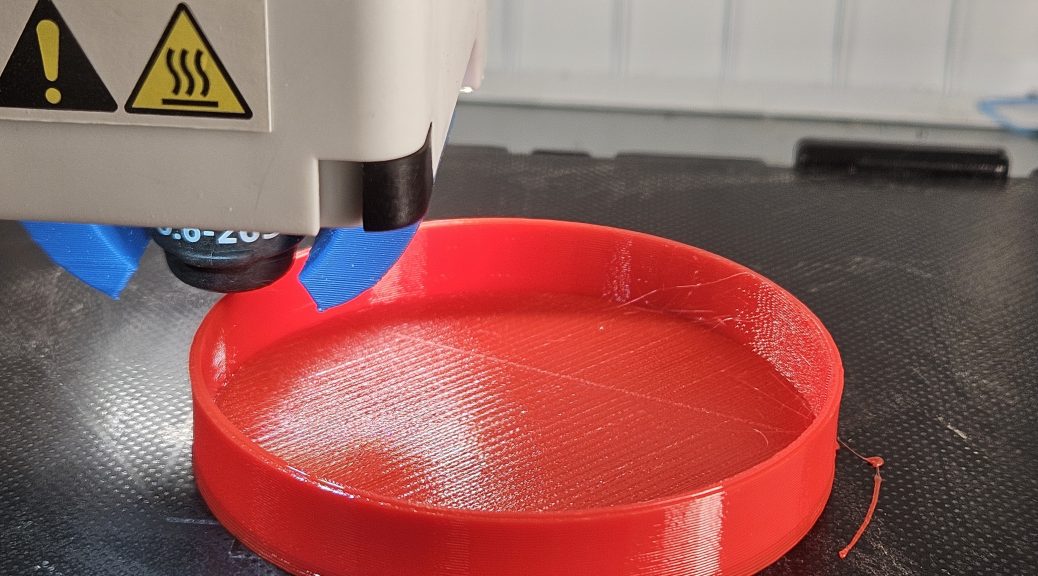

Getting the z-height is very important of course, perhaps more important with TPU than other filaments in my experience. The reason being that whole jamming extruder problem. Too close and most filaments just “click” and skip a bit, but TPU bunches up, jams, and it all goes wrong; too far and you’ve got no adhesion! And the margin between these two is narrow. I suggest printing a base pad as a test to get it calibrated right. Every time I change filament or do my first print after a hiatus I use the printer’s bed calibration function with the bed and nozzle preheated to match the print settings, setting it up so it just lightly clamps a bit of 80gsm printer paper. Then I fiddle with the z-offset adjustment in the slicer to make it work, today the ideal seemed to be a +0.05 z-height adjustment. Tomorrow it could be different!

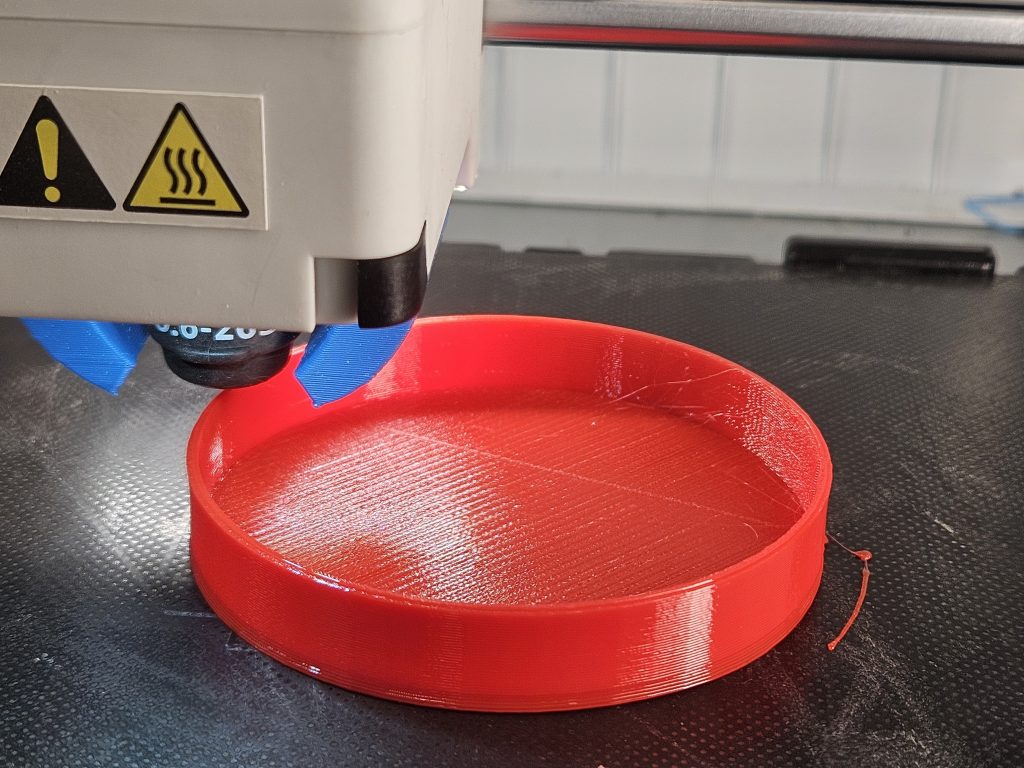

For the small cap I watched the extrusion mechanism like hawk – if you catch it quick and pull it back a bit you can save the print. It printed with zero intervention. So I was more laid back about the larger cap print… and it also printed without intervention. So it might be possible to push that print speed up a little in future, but I probably wouldn’t bother.

On these settings the small (28mm outer dia) cap was a mere 7 minute print, and the larger (68mm outer dia) one was a whopping 1hr 40min print! Noting that the small cap had a 1mm thick base and 1 shell thick side, and the larger one a much beefier 2mm thick base and 2 shell thick side.

I would in general recommend keeping TPU printing to a minimum with this specific printer. Small simple objects… though I did print a small squishy mesh cat one time and that worked. Mainly the reason is because the print speed is so slow… if it goes wrong in a long print you’ve got a lot of time wasted. If you want to print a lot of TPU then it seems getting a direct drive extruder is the key recommendation. (It’s on my wishlist!)